18 Feb Formative assessment results are no slam dunk

By Dale Chu

By all accounts, Saturday’s NBA Slam Dunk Contest was a dizzying display of gravity-defying athleticism. Even for the uninitiated, it was hard not to appreciate the performances of the aerial artists who competed. Because scoring an event like this involves some degree of subjectivity, controversy often follows, and Chicago was no exception: afterward, fingers were quickly pointed at the judges’ table.

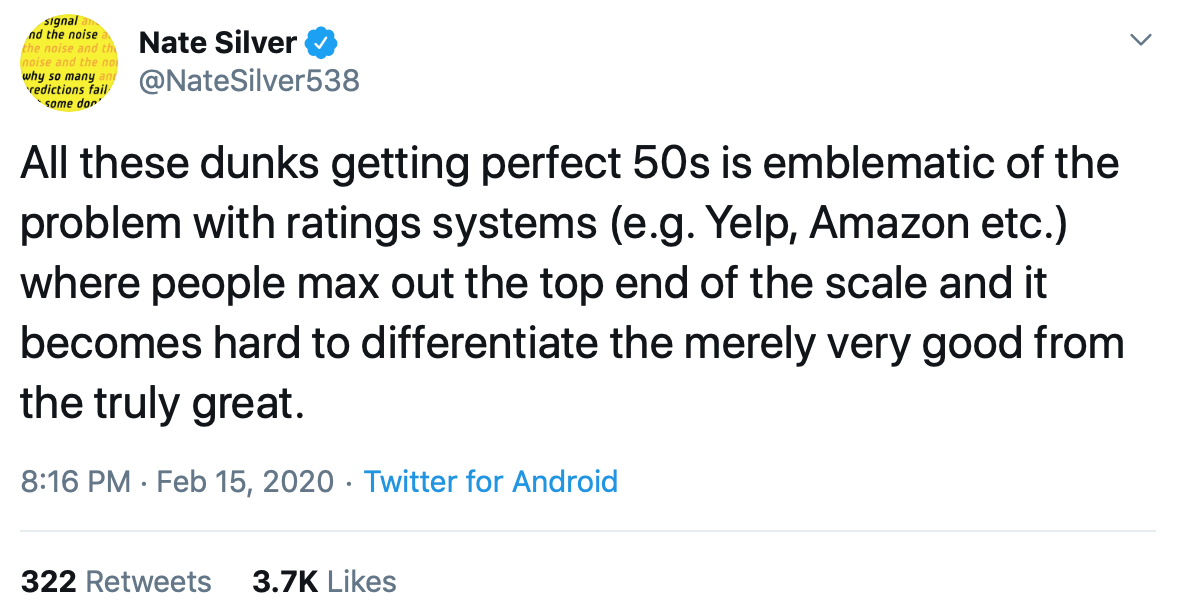

This is not a sports blog, but statistician Nate Silver made an observation about the contest that education advocates would do well to consider:

We see this kind of thing all the time in education vis-à-vis grade inflation. In K-12, it’s a pervasive and well-documented phenomenon, especially at the high school level. Between suspect graduation rates and schools naming multiple valedictorians, getting an honest appraisal of student performance has become exceedingly difficult. The K-12 assessment world hasn’t been immune either.

Notably, the honesty gap, meaning the discrepancy between how proficiency is defined on the National Assessment of Educational Progress (NAEP) and how a state defines it, remains an issue, though there are some signs that it’s narrowing. But there’s another obstacle keeping parents and other stakeholders from hearing the truth about how students are doing: a “district honesty gap,” the delta between how students fare on their annual state assessment and how they fare on the progress-monitoring tools (i.e., formative and interim assessments) that schools and states across the country use throughout the year. In an upcoming post, I plan to delve deeper into this second honesty gap: how it manifests itself, what impact it has, and what states might do to address it.
